Projects

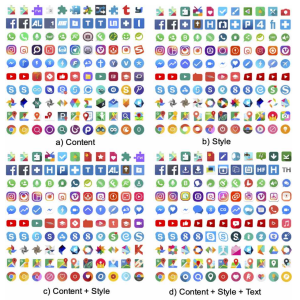

Deep Learning-based Counterfeit Mobile App Detection in Google Play Store

This research project, conducted by Data61 at CSIRO, focused on detecting counterfeit mobile apps in the Google Play Store using deep learning. Neural embeddings were generated for over 1.2 million apps, with convolutional neural networks capturing their visual features and Word2Vec or Doc2Vec models capturing their textual features. These embeddings were used to identify apps that imitated the most downloaded ones, which were then examined for signs of malware, misuse of dangerous permissions, and embedded third-party advertising libraries. The aim was to make the apps available to users more reliable by detecting and analyzing fraudulent practices in the Google Play Store.

Forbes Article | Usyd News | Paper
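
As a rough illustration of how embeddings can surface look-alike apps, the sketch below ranks candidate apps by cosine similarity to a popular app's embedding. It is a minimal example under stated assumptions, not the project's pipeline: the function names `cosine_similarity` and `rank_lookalikes`, the app ids, and the three-dimensional toy vectors are all hypothetical, and the choice of cosine similarity as the distance measure is an assumption.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_lookalikes(target, candidates, top_k=3):
    """Return the top_k (app_id, similarity) pairs most similar to the target embedding."""
    scored = [(app_id, cosine_similarity(target, emb))
              for app_id, emb in candidates.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy embeddings with hypothetical values; real embeddings would come from
# the image and text models and have many more dimensions.
popular_app = [0.9, 0.1, 0.3]
candidates = {
    "app_a": [0.88, 0.12, 0.31],  # nearly identical to the popular app
    "app_b": [0.1, 0.9, 0.2],     # unrelated
    "app_c": [0.5, 0.5, 0.5],     # loosely similar
}

ranked = rank_lookalikes(popular_app, candidates, top_k=2)
for app_id, score in ranked:
    print(f"{app_id}: {score:.3f}")
```

In a setting like this, the highest-ranked candidates would be the ones handed on for closer inspection, for example permission or malware checks.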

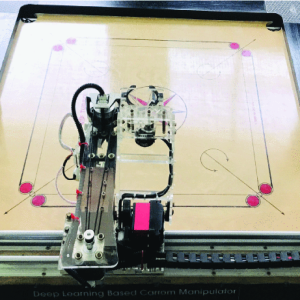

Deep Learning-based Autonomous Carrom Bot

This project implements an automated carrom-playing robot that continuously analyzes the board configuration from a vision feed using image processing and plays a sequence of the best available shots to complete a game. It uses reinforcement learning to implement strategic gameplay.

Demo | Game play | Paper

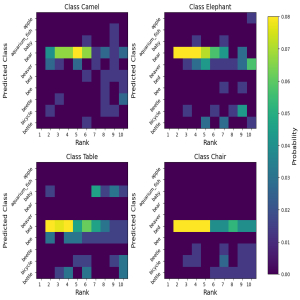

Class Ranking-based Out-of-Distribution Detection

Out-of-distribution (OOD) detection remains a key challenge preventing the rollout of critical AI technologies like autonomous vehicles into the mainstream, as classifiers trained on in-distribution (ID) data are unable to gracefully handle OOD data. This project introduces a novel approach for OOD detection based on the class ranking information implicitly learned by deep neural networks during pre-training.

Post-hoc | Training-aware
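
To give a feel for what a ranking-based OOD signal could look like, the sketch below compares the class ranking implied by a sample's logits with a reference ranking stored for its predicted class. This is an illustrative assumption only and not the method introduced in the project: the Spearman footrule distance, the `reference_rankings` table, and all function names and numbers are hypothetical.

```python
def ranking(scores):
    """Return class indices ordered from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def footrule_distance(rank_a, rank_b):
    """Spearman footrule: sum over classes of the absolute difference in rank position."""
    pos_b = {cls: pos for pos, cls in enumerate(rank_b)}
    return sum(abs(pos - pos_b[cls]) for pos, cls in enumerate(rank_a))


def ood_score(logits, reference_rankings):
    """Distance between a sample's class ranking and the reference ranking
    of its predicted class; a larger value suggests a more OOD-like sample."""
    sample_rank = ranking(logits)
    predicted = sample_rank[0]
    return footrule_distance(sample_rank, reference_rankings[predicted])


# Hypothetical reference rankings, one per predicted class. In practice these
# might be estimated from in-distribution training data (an assumption).
reference_rankings = {
    0: [0, 1, 2, 3],
    1: [1, 0, 2, 3],
    2: [2, 3, 0, 1],
    3: [3, 2, 1, 0],
}

id_like = ood_score([5.0, 3.0, 1.0, 0.5], reference_rankings)   # ranking matches the reference
ood_like = ood_score([5.0, 0.2, 1.0, 4.0], reference_rankings)  # ranking deviates from the reference
print(id_like, ood_like)
```

A score like this would be thresholded, with samples above the threshold treated as OOD; how the threshold is chosen is left open here.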

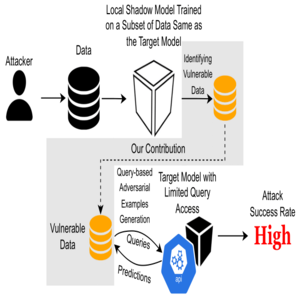

Quantifying and Exploiting the Adversarial Vulnerability of Data Against Deep Neural Networks

This research investigates the vulnerability of inputs in an adversarial setting and demonstrates that certain samples are more susceptible to adversarial perturbations than others. Specifically, it employs a simple yet effective measure of the adversarial vulnerability of inputs, ZGP, which relies on the clipped gradients of the loss with respect to the input. Furthermore, it proposes a novel black-box attack pipeline that improves the efficiency of conventional query-based black-box attacks, and shows that pre-filtering inputs based on ZGP can boost attack success rates, particularly at low perturbation levels.

Paper